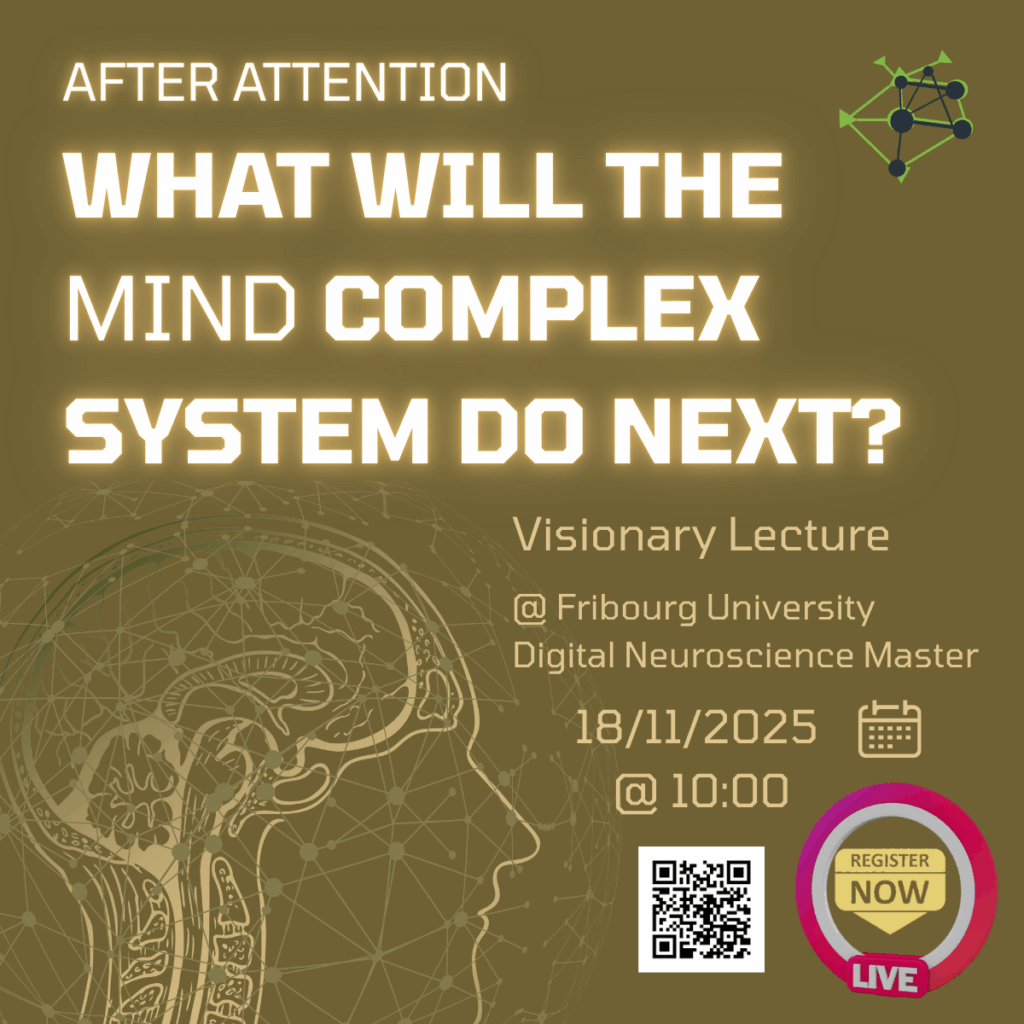

Artificial intelligence is evolving from a collection of powerful algorithms into a genuine partner for human cognition. During a recent visionary lecture, Dr. Rabih Amhaz explored this transition with a focus on multimodal AI, neuroscientific inspiration, and the operational realities of complex systems. The session unfolded as a deep dive into intelligence in its human, artificial, and hybrid forms, and examined how modern models are reshaping high-stakes environments such as aviation, manufacturing, and digital operations.

The lecture opened with a direct question: What is intelligence? Instead of reducing it to a single definition, the discussion embraced its full scope: philosophical, cognitive, biological, and computational. Intelligence, in the modern scientific context, is best understood as a complex adaptive system: dynamic, interactive, and deeply contextual. This became the foundation for the entire session.

From the origins of perceptrons in the 1950s to today’s transformer-based architectures, the lecture traced how AI has borrowed from neuroscience to build systems capable of perception, abstraction, and decision-making. Reinforcement learning mirrors reward pathways. Convolutional networks echo the visual cortex. And now, attention-based architectures treat information as structured graphs rather than linear sequences.

A significant part of the lecture addressed a practical reality: choosing an AI model is not a matter of guesswork or fashion. It requires a precise operational definition, data characterization, and an understanding of constraints, especially in safety-critical domains. Students were invited to reconstruct an AI pipeline from first principles, reinforcing the idea that model selection comes after understanding context, not before.

The discussion progressed to the limitations of traditional machine learning when dealing with non-linear, multi-agent, emergent systems such as industrial operations, cockpit behavior, or large-scale workflows. Graphs were introduced as the missing layer that binds these complex interactions into a coherent structure. This connects naturally to transformers, which, despite their popularity, are fundamentally graph-based engines operating over tokens.

The lecture then made a decisive shift from theory to application. In collaboration with neuroscience and operational engineering teams, Dr. Amhaz presented ongoing experiments that combine eye-tracking and object detection to analyze an operator assembling mechanical parts. The goal is to understand cognitive load, detect deviations, and create adaptive support systems capable of maintaining safety and performance.

The industrial experiments revealed striking differences between organized and unorganized workspaces. Gaze heatmaps and object-interaction graphs showed how disorganization amplifies irrelevant fixations and cognitive dispersion.

These findings point towards a clear path: the future of AI is multimodal, context-aware, graph-structured, and deeply integrated with human cognition.

The lecture concluded with a sober but optimistic stance. AI today is powerful but not omnipotent, “a philosopher with experience,” capable of generating but not understanding. The real breakthrough lies in coupling structured human behavior with data-driven models. This is the emerging frontier of augmented cognition, Industry 5.0, and next-generation digital ecosystems.

This lecture was not only a tour of modern AI but a roadmap to its future, one in which humans remain central, amplified by intelligent systems capable of learning not just from data, but from the complexity of human expertise.

What is intelligence from a scientific perspective?

Intelligence is the ability of a system to perceive, process, and adapt to complex environments. It involves abstraction, pattern recognition, decision-making, and learning. In science, it is treated as an emergent property of complex adaptive systems.

How does AI relate to the concept of complex adaptive systems?

AI models absorb information, adjust internal parameters, and change behavior based on experience. These dynamic adjustments mirror how complex systems evolve. AI becomes intelligent when it can self-organize under varying conditions.

Why do different fields define intelligence differently?

Philosophy focuses on reasoning and meaning. Neuroscience focuses on biological computation and behavior. Computer science focuses on tasks, performance, and measurable output.

What distinguishes narrow, general, and super-intelligence?

Narrow AI solves specific tasks with high efficiency. General AI would integrate reasoning, abstraction, and generalization across all domains. Super-intelligence refers to a hypothetical system surpassing human cognitive capability.

How did neuroscience influence the early development of AI?

Neuroscience provided the structural inspiration for artificial neurons and layered networks. Early models like perceptrons were abstractions of biological neurons. Later learning rules reflected synaptic strengthening observed in real brains.

What are perceptrons and why were they revolutionary?

Perceptrons introduced the idea of machines learning from examples. They demonstrated how binary classification could emerge from linear decision boundaries. This created the foundation for modern neural networks.
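To make "learning from examples" concrete, here is a minimal sketch of the classic perceptron update rule, trained on the logical AND function. The function names, the learning rate, and the toy dataset are illustrative assumptions, not material from the lecture.

```python
def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Perceptron rule for two-feature inputs with labels in {0, 1}."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in zip(samples, labels):
            predicted = 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0
            error = target - predicted  # 0 when correct, +1 or -1 when wrong
            # Move the linear decision boundary only when a mistake is made.
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


def predict(weights, bias, point):
    x1, x2 = point
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


# Logical AND is linearly separable, so the rule converges on it.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, p) for p in samples]
print(predictions)  # [0, 0, 0, 1]
```

The learned weights and bias define a single straight line separating the one positive example from the three negative ones, which is the linear decision boundary the answer refers to.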

How does Hebbian learning relate to neural behavior?

Hebbian learning states that neurons strengthen their connection when activated together. This idea models how memory and learning might form in biological brains. It inspired early learning rules in artificial networks.
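The simplest form of this idea can be sketched as a weight change proportional to the product of pre- and post-synaptic activity. The helper name and the learning rate below are assumptions chosen for illustration.

```python
def hebbian_update(weight, pre, post, lr=0.1):
    """Plain Hebbian rule: the weight grows by lr * pre * post."""
    return weight + lr * pre * post


w_together = 0.0  # both neurons repeatedly active at the same time
w_apart = 0.0     # presynaptic neuron active, postsynaptic neuron silent
for _ in range(5):
    w_together = hebbian_update(w_together, pre=1.0, post=1.0)
    w_apart = hebbian_update(w_apart, pre=1.0, post=0.0)

print(w_together > w_apart)  # True
```

Only the co-activated connection strengthens. Note that this plain rule grows without bound, which is why practical variants usually add some form of normalization or decay.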

What inspired convolutional neural networks?

CNNs drew inspiration from the hierarchical processing structure of the visual cortex. They mimic receptive fields that detect local patterns. This architecture allows models to extract features efficiently from images.
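As a rough illustration of a receptive field detecting a local pattern, the sketch below slides a small hand-written kernel over a tiny image. In a real CNN the kernel values are learned; the image, kernel, and function name here are assumptions.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Each output value only sees a kh x kw patch: its receptive field.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Dark left half, bright right half: one vertical edge in the middle.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The feature map responds only where the dark-to-bright transition occurs, and the same small kernel is reused at every position, which is what makes the feature extraction efficient.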

How does reinforcement learning reflect biological reward systems?

Reinforcement learning uses reward signals to guide behavior improvement. This mirrors dopamine pathways that reinforce successful actions. Policies emerge through trial, error, and feedback loops.
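A stateless two-armed bandit is about the smallest setting in which reward-driven trial and error can be shown. The sketch below uses an epsilon-greedy strategy with running-average value estimates; the reward probabilities, step count, and seed are arbitrary assumptions, and full reinforcement learning additionally handles states and delayed rewards.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy action selection with incremental value estimates."""
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # estimated reward per action
    counts = [0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore
        else:
            action = max(range(len(values)), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Feedback loop: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


values, counts = run_bandit([0.2, 0.8])
best_action = max(range(len(values)), key=lambda a: values[a])
print(best_action)
```

The agent is never told which action is better. The preference for the higher-paying action emerges from the reward signal alone.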

Why is model selection impossible without a clear operational problem?

Different problems require different data structures and constraints. Without defining the task, every model appears plausible but none is optimal. Precise formulation enables effective trade-offs.

What steps define a rigorous AI project pipeline?

First, the operational problem must be defined precisely. Then data must be characterized, processed, and validated. Finally, models are trained, evaluated, and integrated into the operational workflow.

How do we characterize the structure and geometry of data?

Data may follow Euclidean formats such as images or non-Euclidean structures like graphs. Understanding geometry determines the mathematical tools to use. Poor characterization leads to inappropriate model design.

What constraints must be evaluated in industrial or medical AI applications?

Safety and explainability are mandatory. Hardware limitations and real-time requirements shape architectural choices. Regulatory and ethical constraints restrict deployment pathways.

Why is multimodal data essential for complex decision-making?

Single signals only capture one dimension of a cognitive or operational event. Multimodal fusion uncovers richer patterns and interactions. This reduces ambiguity and increases reliability.

How do supervised, unsupervised, and reinforcement learning differ operationally?

Supervised learning maps labeled inputs to outputs. Unsupervised learning extracts structures and clusters without labels. Reinforcement learning optimizes decision policies based on rewards.

What makes a system “complex” in the scientific sense?

A complex system arises from many interacting components that generate emergent behavior. Its dynamics are non-linear and impossible to predict from individual parts alone. The whole exhibits properties not present at the micro level.

Why do traditional neural networks struggle with non-linear multi-agent systems?

Traditional networks typically assume stationary patterns, an assumption that breaks down in evolving environments. Interactions between agents create relational structures that standard models cannot encode. Graph or interaction-aware models handle such complexity better.

What role do graphs play in modeling real-world complexity?

Graphs represent entities and their relationships clearly. They capture structure, dependencies, and multi-agent interactions. This enables reasoning aligned with natural system behavior.

How do graph neural networks process interactions and relationships?

GNNs propagate information between connected nodes through message passing. They update node states based on local neighborhoods. This process reflects relational reasoning directly encoded in data.
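One round of message passing can be sketched without any learned parameters: each node simply averages its own value with those of its neighbors. Real GNN layers add learned weight matrices and non-linearities on top of this aggregation; the graph and feature values below are assumptions.

```python
def message_passing_step(features, neighbors):
    """Each node averages its own feature with its neighbors' features."""
    updated = {}
    for node, value in features.items():
        messages = [features[n] for n in neighbors[node]]
        updated[node] = (value + sum(messages)) / (1 + len(messages))
    return updated


# A three-node chain: a - b - c. Only node "a" starts with a signal.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": 1.0, "b": 0.0, "c": 0.0}

after_one = message_passing_step(features, neighbors)
after_two = message_passing_step(after_one, neighbors)
print(after_one["c"], after_two["c"] > 0)  # 0.0 True
```

After one round the signal from "a" has reached only its direct neighbor "b". It takes a second round to reach "c", which shows how stacking layers widens the neighborhood each node can draw on.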

Why are transformers essentially graph architectures?

Attention mechanisms dynamically link every token to every other token. This creates a fully connected, weighted graph during computation. Transformers operate by learning these graph structures at scale.

How does attention create dynamic connections between tokens?

Attention computes similarity scores between all token pairs. These scores determine information flow across positions. The model builds contextual meaning by focusing on relevant relationships.
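The computation can be sketched as scaled dot-product attention over a handful of toy embeddings. For brevity, the sketch uses the raw embeddings as queries, keys, and values, whereas a real transformer first applies learned projections; all values are assumptions.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def self_attention(queries, keys, values):
    """Return the attention weight matrix and the mixed output vectors."""
    scale = math.sqrt(len(keys[0]))
    # One row of weights per query: similarity to every key, normalized.
    weights = [softmax([dot(q, k) / scale for k in keys]) for q in queries]
    outputs = [
        [sum(w * v[d] for w, v in zip(row, values)) for d in range(len(values[0]))]
        for row in weights
    ]
    return weights, outputs


# Tokens 0 and 1 are similar; token 2 points in a different direction.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
weights, outputs = self_attention(tokens, tokens, tokens)
print(weights[0][1] > weights[0][2])  # True
```

Each row of `weights` sums to 1 and can be read as the weighted edges leaving one token in a fully connected graph, recomputed for every input.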

Why is tokenization a universal representation layer for diverse modalities?

Tokenization reduces complex signals into manageable discrete or continuous units. Transformers can process these units independently of their origin. This allows text, images, proteins, and actions to share an architectural backbone.

What is the relationship between graph learning and multimodal AI?

Multimodal data naturally forms interconnected structures. Graphs unify these structures for reasoning. GNNs and attention models allow joint learning across modalities.

How do we capture cognitive load using AI tools?

Eye-tracking metrics reveal attention patterns and effort. Physiological signals like EEG reflect internal cognitive states. Combining them yields a more reliable estimate of cognitive load than either signal alone.

What insights can eye-tracking provide about human behavior?

Fixation duration shows depth of processing. Scan paths reveal strategy and search patterns. Deviations often signal confusion or overload.

How does workspace organization influence cognitive performance?

A disorganized workspace forces unnecessary search and re-orientation. This increases cognitive cost and fixation scatter. Organized setups streamline perceptual and motor decisions.
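One simple way to quantify fixation scatter is the root-mean-square distance of fixation points from their centroid. This particular metric and the coordinates below are illustrative assumptions, not necessarily the measure used in the experiments.

```python
import math


def fixation_dispersion(points):
    """Root-mean-square distance of gaze points from their centroid."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    squared = [(x - cx) ** 2 + (y - cy) ** 2 for x, y in points]
    return math.sqrt(sum(squared) / len(points))


# Hypothetical fixation coordinates in pixels.
organized = [(100, 100), (105, 98), (98, 103), (102, 101)]
disorganized = [(20, 300), (400, 50), (250, 220), (60, 40)]

print(fixation_dispersion(organized) < fixation_dispersion(disorganized))  # True
```

A low value indicates gaze concentrated on a small region, while a high value indicates attention spread across the workspace.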

How do object detection and fixation data combine to reveal operator strategy?

Object detection identifies what users look at. Fixation patterns determine how long and when interactions occur. Together they map operational workflows in real time.
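A minimal sketch of this combination assigns each fixation to the detected bounding box that contains it, then accumulates dwell time and the order in which objects were visited. The box coordinates, fixation records, and object labels are hypothetical, and a real pipeline would take the boxes from a detector running on each video frame.

```python
def object_at(point, boxes):
    """Return the label of the first box containing the gaze point, else None."""
    x, y = point
    for label, (x_min, y_min, x_max, y_max) in boxes.items():
        if x_min <= x <= x_max and y_min <= y <= y_max:
            return label
    return None


def dwell_time_per_object(fixations, boxes):
    """Total fixation duration in milliseconds spent on each object."""
    totals = {}
    for x, y, duration_ms in fixations:
        label = object_at((x, y), boxes)
        if label is not None:
            totals[label] = totals.get(label, 0) + duration_ms
    return totals


def gaze_sequence(fixations, boxes):
    """Order of visited objects, collapsing consecutive repeats."""
    sequence = []
    for x, y, _ in fixations:
        label = object_at((x, y), boxes)
        if label is not None and (not sequence or sequence[-1] != label):
            sequence.append(label)
    return sequence


# Hypothetical detections (x_min, y_min, x_max, y_max) and fixations (x, y, ms).
boxes = {"screwdriver": (0, 0, 100, 50), "gearbox": (150, 0, 300, 200)}
fixations = [(20, 10, 180), (200, 100, 420), (210, 90, 300), (400, 400, 150), (50, 25, 120)]

print(dwell_time_per_object(fixations, boxes))  # {'screwdriver': 300, 'gearbox': 720}
print(gaze_sequence(fixations, boxes))  # ['screwdriver', 'gearbox', 'screwdriver']
```

The visit sequence is the raw material for an object-interaction graph: each consecutive pair of objects becomes a directed edge.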

Why do experts maintain stable gaze patterns under stress?

Experts rely on internalized mental models. Stress does not disrupt their gaze patterns because practice has made the underlying tasks largely automatic. Their gaze becomes a marker of robust experience.

What makes novices more susceptible to cognitive dispersion?

Novices lack stable schemas for tasks. They distribute attention broadly, searching for cues. This creates scattered fixation maps and slower decision-making.

What is the future of human-AI coupling in high-risk environments?

It will shift toward adaptive, context-aware assistants. These systems will stabilize attention and reduce cognitive load. The goal is resilience, not automation replacement.

Why is explainability critical in operational AI?

Operators must understand why a system issues a warning. Transparent reasoning builds trust in critical environments. Explainability prevents blind reliance and supports accountability.

Why does Industry 5.0 rely on augmented human performance?

It recognizes humans as central actors in complex systems. AI enhances, not replaces, their decision-making capabilities. Collaboration becomes the core operational paradigm.

Why does a fully data-driven AI approach fail without structured context?

Data alone lacks meaning unless anchored to tasks. Models may learn correlations instead of causal relations. Context restores operational relevance.

What does it mean for a transformer to create an internal graph of meaning?

Each token interacts with all others through attention weights. These interactions form a dynamic relational graph. Meaning emerges from the learned structure of these connections.

How can graph-based abstractions unify neuroscience and AI modeling?

Both biological and artificial systems rely on interconnected networks. Graphs represent dependencies across nodes and regions. This creates a shared mathematical language for both fields.

Why are multimodal pipelines more robust than single-signal systems?

Each modality compensates for the limitations of others. Noise or failure in one stream does not collapse the system. Fusion increases accuracy and resilience.
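The robustness argument can be illustrated with a simple late-fusion scheme: a weighted average over whichever modalities are currently reporting. The modality names, weights, and scores below are assumptions (heart rate in particular is added only for illustration), and real systems often use learned fusion instead of fixed weights.

```python
def fuse_estimates(estimates, weights):
    """Weighted average over available modalities (None means the stream is down)."""
    available = {name: value for name, value in estimates.items() if value is not None}
    if not available:
        raise ValueError("no modality available")
    # Renormalize so the remaining streams still produce a valid estimate.
    total_weight = sum(weights[name] for name in available)
    return sum(weights[name] * value for name, value in available.items()) / total_weight


# Hypothetical per-modality cognitive load scores in [0, 1].
weights = {"eye_tracking": 0.5, "eeg": 0.3, "heart_rate": 0.2}
all_streams = fuse_estimates({"eye_tracking": 0.8, "eeg": 0.6, "heart_rate": 0.7}, weights)
eeg_dropped = fuse_estimates({"eye_tracking": 0.8, "eeg": None, "heart_rate": 0.7}, weights)

print(round(all_streams, 2))  # 0.72
```

When the EEG stream drops out, the estimate shifts slightly but the system keeps producing an output from the remaining signals.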